
6.6 ModuleControllerV2 Technical Documentation

1 - 6.6.1 ModuleControllerV2 Architecture

Brief Introduction

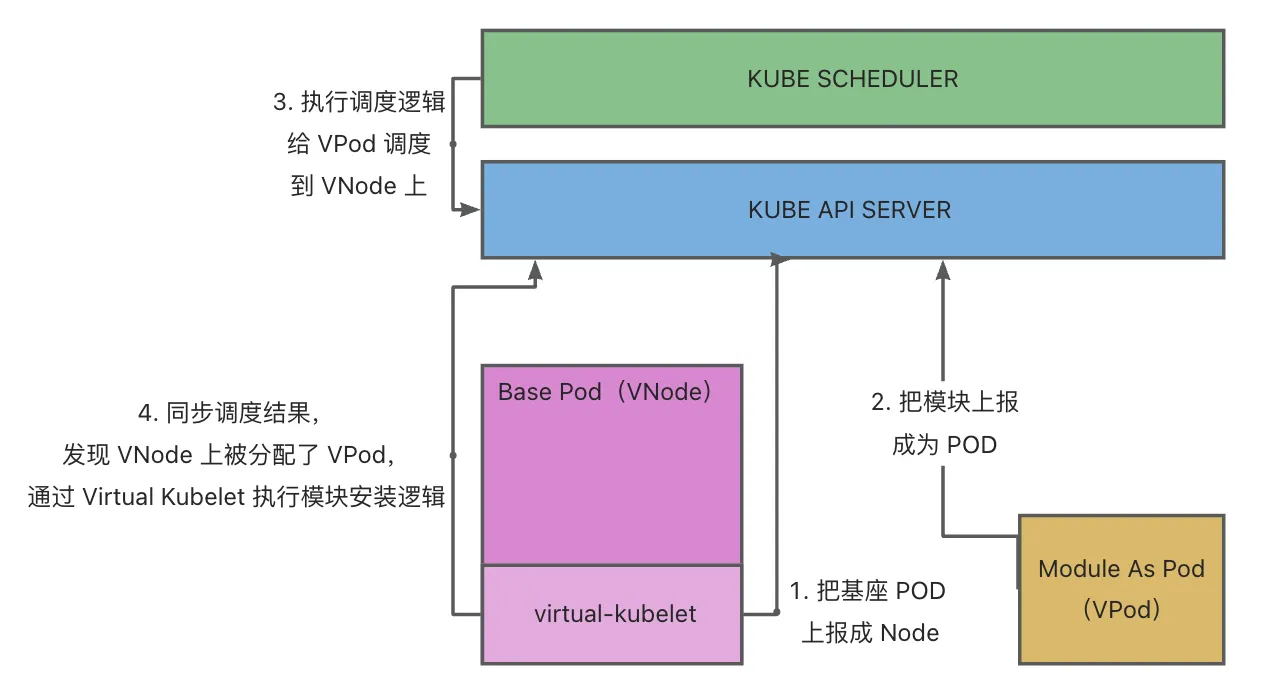

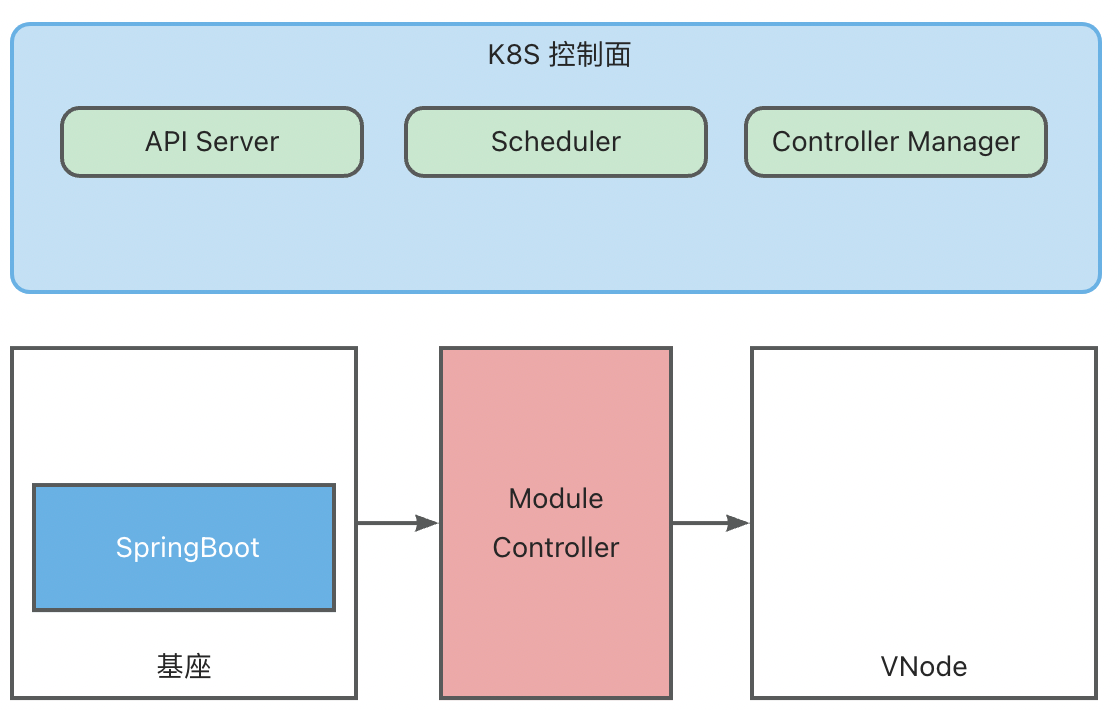

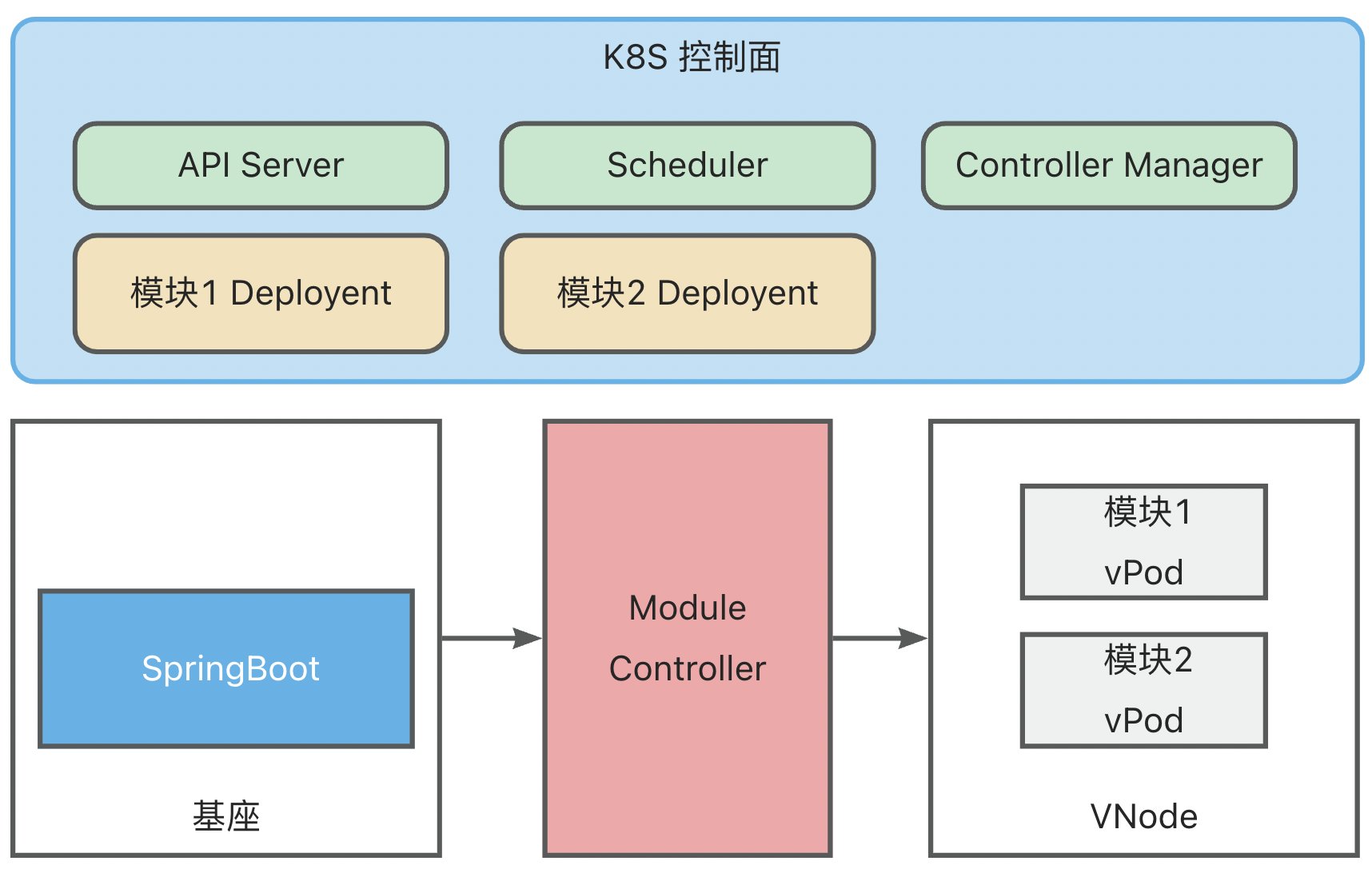

ModuleControllerV2 is a K8S control plane component built on the capabilities of Virtual Kubelet. It presents each base as a Node in K8S and maps each Module to a Container, so that Module operations become Pod operations. By reusing K8S’s Pod lifecycle management, scheduling, and existing controllers such as Deployment, DaemonSet, and Service, it provides Serverless Module operations, scheduling, and base interaction that complete within seconds.

Background

The original Module Controller (hereafter referred to as MC) was designed based on K8S Operator technology.

In this mode, the original MC logically defines a separate Module control plane isolated from the base: base operations are handled with K8S’s native capabilities, while Module operations are handled by logic encapsulated in the Operator.

While this method logically distinguishes between Module and base concepts, it also presents certain limitations:

Modules are abstracted differently from the base model. Therefore, the original MC not only needs to load/unload Modules on the base but also:

- Be aware of all current bases

- Maintain base status (online status, loaded Modules, Module load, etc.)

- Maintain Module status (online status, etc.)

- Implement appropriate Module scheduling logic as required

This results in high development and maintenance costs, because each scenario requires its own Operator development.

Horizontal expansion of Module capabilities and roles is difficult. This implementation method is logically incompatible with traditional microservices architectures, where roles among services are similar. However, in the Operator implementation, Module and base abstraction levels differ, hindering interoperability. For example, in Koupleless’s proposal: “Modules can either attach to the base or run independently as services.” In the Operator architecture, achieving the latter requires custom scheduling logic and specific resource maintenance, leading to high development and maintenance costs for each new capability/role.

In this architecture, Module becomes a new concept, increasing learning costs for users from a product perspective.

Architecture

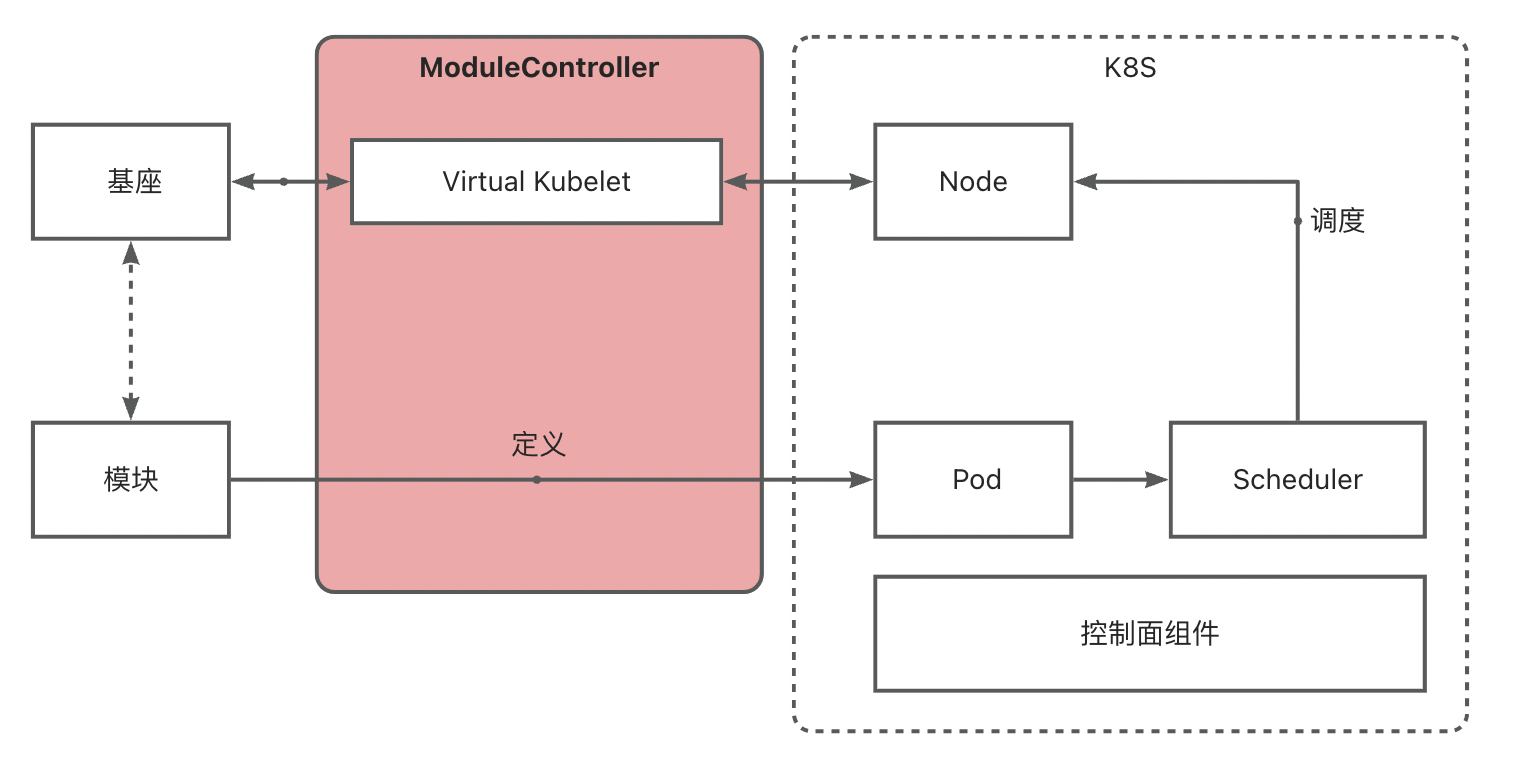

ModuleControllerV2 currently includes the Virtual Kubelet Manager control plane component and the Virtual Kubelet component. The Virtual Kubelet component is the core of Module Controller V2, responsible for mapping base services as nodes and maintaining Pod states on them. The Manager maintains base-related information, monitors base online/offline status, and maintains the basic runtime environment for the Virtual Kubelet component.

Virtual Kubelet

Virtual Kubelet is implemented with reference to the official documentation.

In summary, VK is a programmable Kubelet.

VK works like an interface in a programming language: it defines a set of Kubelet standards, and by implementing this interface we can create our own Kubelet.

Conceptually, the Kubelet that runs on ordinary K8S nodes can be seen as one implementation of VK: by implementing VK’s abstract methods, it lets the K8S control plane use and monitor the physical resources on a node.

VK can therefore masquerade as a Node. To distinguish traditional Nodes from Nodes masqueraded by VK, we call the latter VNodes.
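The "programmable Kubelet" idea can be illustrated with a minimal Python sketch. The class and method names below (`KubeletInterface`, `BaseProvider`, `create_pod`, and so on) are hypothetical and are not the actual Virtual Kubelet API; the sketch only shows how implementing one interface lets a base behave like a node that accepts Pods.

```python
from abc import ABC, abstractmethod


class KubeletInterface(ABC):
    """Hypothetical, simplified stand-in for the operations a Kubelet must provide."""

    @abstractmethod
    def create_pod(self, pod):
        ...

    @abstractmethod
    def delete_pod(self, pod):
        ...

    @abstractmethod
    def get_pod_status(self, name):
        ...


class BaseProvider(KubeletInterface):
    """Hypothetical provider: a 'Pod' here is a Module installed on one base."""

    def __init__(self):
        self.modules = {}  # module name -> status

    def create_pod(self, pod):
        # A real provider would send an install command to the base here.
        self.modules[pod["name"]] = "Running"

    def delete_pod(self, pod):
        # A real provider would send an uninstall command to the base here.
        self.modules.pop(pod["name"], None)

    def get_pod_status(self, name):
        return self.modules.get(name, "NotFound")
```

Because the control plane only talks to the interface, it does not need to know whether a real machine or a base process sits behind it.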

Logical Structure

In the Koupleless architecture, base services run in Pods, scheduled and maintained by K8S, and run on actual nodes.

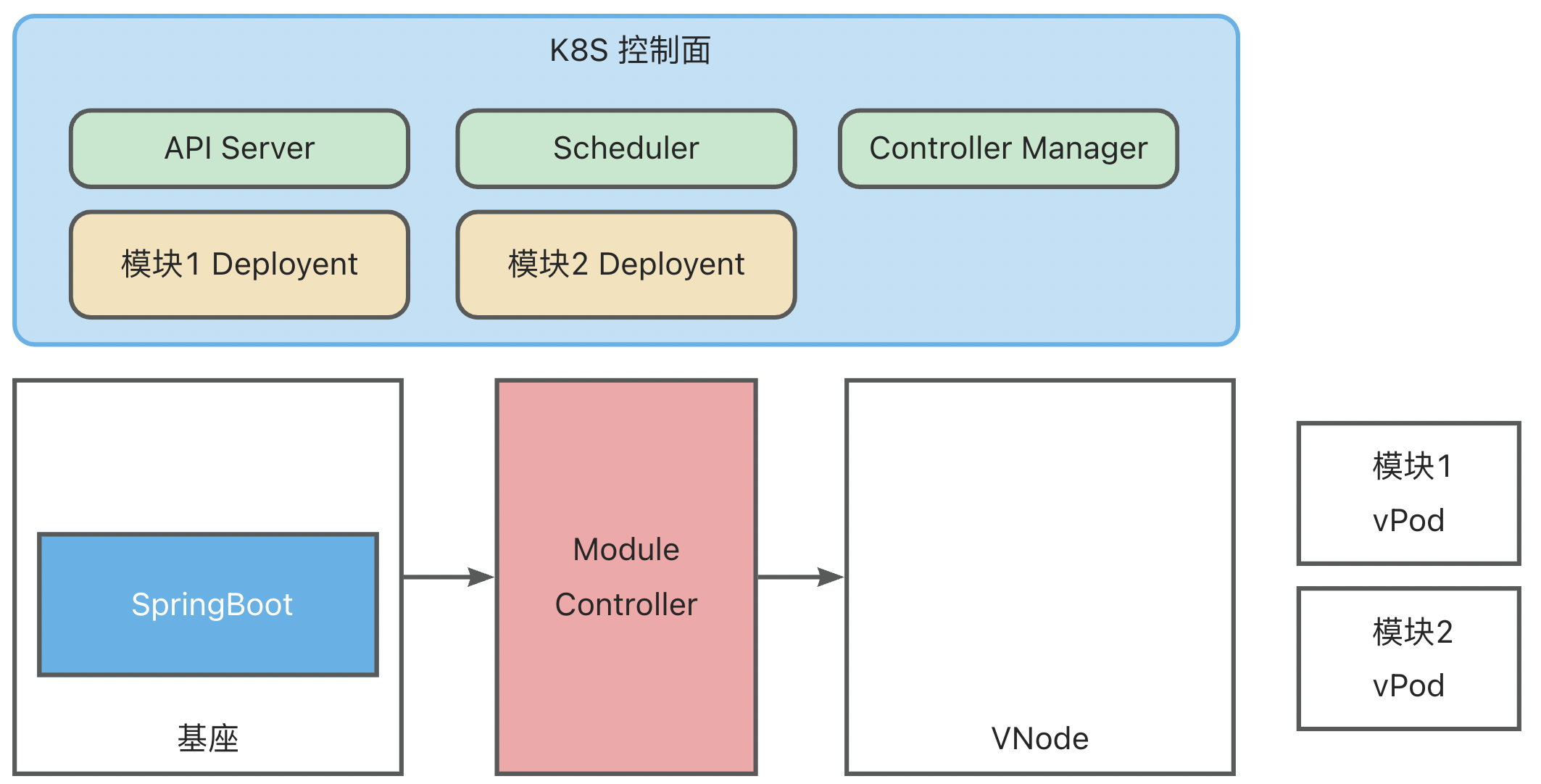

Module scheduling needs align with base scheduling. Thus, in MC V2 design, VK is used to disguise base services as traditional K8S Nodes, becoming base VNodes, while Modules are disguised as Pods, becoming module VPods. This logically abstracts a second layer of K8S to manage VNodes and VPods.

In summary, the overall architecture includes two logical K8S:

- Base K8S: Maintains real Nodes (virtual/physical machines), responsible for scheduling base Pods to real Nodes.

- Module K8S: Maintains virtual VNodes (base Pods), responsible for scheduling module VPods to virtual VNodes.

These are called logical K8S because they do not necessarily need to be two separate K8S. With good isolation, the same K8S can perform both tasks.

This abstraction allows utilizing K8S’s native scheduling and management capabilities without extra framework development, achieving:

- Management of base VNodes (not a core capability since they are already Pods in the underlying K8S but contain more information as Nodes)

- Management of VPods (core capability: including Module operations, Module scheduling, Module lifecycle status maintenance, etc.)

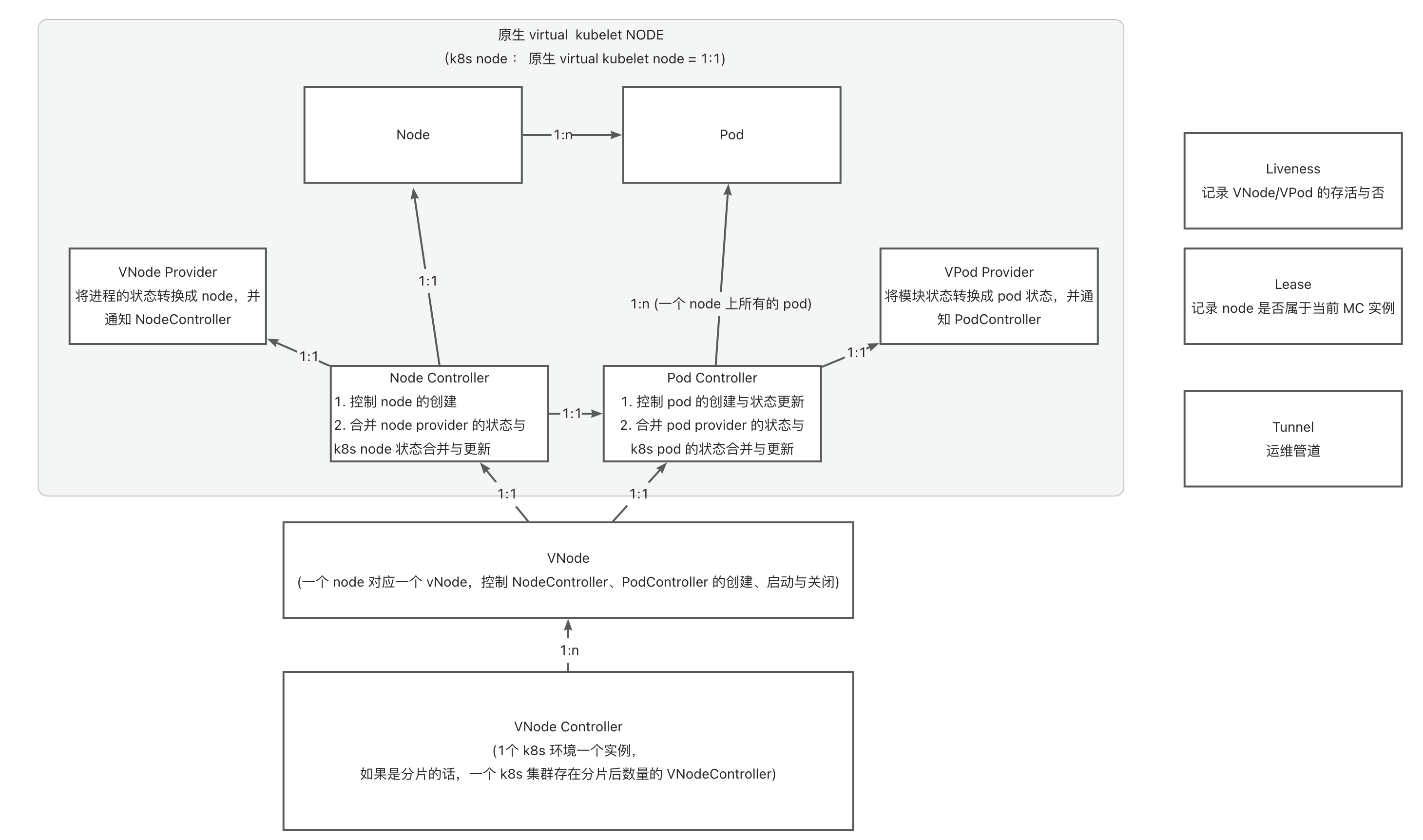

Multi-Tenant VK Architecture

Native VK uses K8S’s Informer mechanism and ListWatch to monitor pod events on the current VNode. This means each VNode requires its own monitoring logic. As the number of bases increases, API Server pressure grows rapidly, hindering horizontal scaling.

To solve this, Module Controller V2 extracts the ListWatch part of Virtual Kubelet, monitors events of specific Pods (those with certain labels in implementation), and forwards them to logical VNodes through in-process communication, reusing Informer resources. This way, each VNode only maintains local context without separate monitoring, reducing API Server pressure.

In the multi-tenant architecture, Module Controller V2 includes two core components:

- Base Registration Center: Discovers base services via a specific operations pipeline and maintains VK context and data transmission.

- VK: Maintains mappings between a specific base and node/pod, maintains node/pod states, and translates pod operations into corresponding Module operations for the base.
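The shared-watch idea described above can be sketched as follows. This is a simplified in-memory model, not the Module Controller implementation: `SharedPodWatcher` and `VNodeContext` are hypothetical names, and Pods are plain dictionaries. The label key and value are taken from the module Pod example in this documentation.

```python
MODULE_LABEL_KEY = "virtual-kubelet.koupleless.io/component"
MODULE_LABEL_VALUE = "module"


class VNodeContext:
    """Hypothetical per-base context: keeps local state only, no watch of its own."""

    def __init__(self, name):
        self.name = name
        self.events = []

    def handle(self, event_type, pod):
        self.events.append((event_type, pod["name"]))


class SharedPodWatcher:
    """One watch stream shared by all VNodes living in the same process."""

    def __init__(self, label_key, label_value):
        self.label_key = label_key
        self.label_value = label_value
        self.vnodes = {}  # node name -> VNodeContext

    def register(self, vnode):
        self.vnodes[vnode.name] = vnode

    def on_event(self, event_type, pod):
        """Forward an event to the owning VNode; return True if it was delivered."""
        # Only Pods carrying the module label are of interest.
        if pod.get("labels", {}).get(self.label_key) != self.label_value:
            return False
        vnode = self.vnodes.get(pod.get("nodeName"))
        if vnode is None:
            return False  # unscheduled, or bound to a node this process does not manage
        vnode.handle(event_type, pod)
        return True
```

With this shape, adding a base only adds one entry to the `vnodes` map; the number of watch connections to the API Server stays constant.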

Sharded Architecture

A single Module Controller lacks disaster recovery capabilities and has an obvious upper limit on the load it can handle. Thus, Module Controller V2 requires a more stable architecture with disaster recovery and horizontal scaling capabilities.

In Module operations, the core concern is the stability of scheduling capabilities. Under the current Module Controller architecture, scheduling stability consists of two parts:

- Stability of the dependent K8S

- Base stability

The first point cannot be guaranteed at the Module Controller layer, so high availability of the Module Controller focuses only on base-level stability.

Additionally, Module Controller’s load mainly involves monitoring and processing various Pod events, related to the number of Pods and bases under control. Due to K8S API Server’s rate limits on a single client, a single Module Controller instance has an upper limit on simultaneous event processing, necessitating load sharding capabilities at the Module Controller level.

Thus, the sharded architecture of Module Controller addresses two core issues:

- High availability of the base

- Load balancing of Pod events

In the Module Controller scenario, Pod events are strongly bound to the base, so balancing the load of Pod events is equivalent to balancing the managed bases.

To address the above issues, Module Controller builds native sharding capability on multi-tenant Virtual Kubelet. The logic is as follows:

- Each Module Controller instance listens to the online information of all bases.

- Upon detecting a base going online, each Module Controller creates corresponding VNode data and attempts to create a VNode node lease.

- Because resource names must be unique in K8S, only one Module Controller instance can successfully create the Lease. Its VNode becomes the primary instance, while the others become replicas that watch the Lease object and try to take over the primary role, which gives the VNode high availability.

- Once a VNode starts successfully as primary, it listens to the Pods scheduled onto it and interacts with the base, while the VNodes that failed to obtain the Lease ignore these events. This achieves load sharding across Module Controllers.

The resulting architecture works as follows: multiple Module Controllers shard the VNode load based on Leases, and VNode high availability is achieved because multiple Module Controllers each hold a copy of the VNode data.
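The Lease-based election can be sketched with a small in-memory model. `LeaseStore` stands in for the K8S API Server and `try_become_primary` for the controller logic; both names are hypothetical, and a real Lease also carries a holder identity and renewal times that this sketch replaces with a plain delete.

```python
class LeaseConflict(Exception):
    """Raised when a Lease with the same name already exists."""


class LeaseStore:
    """In-memory stand-in for the Lease objects kept by the API Server."""

    def __init__(self):
        self.holders = {}  # lease name -> controller id

    def create(self, name, holder):
        # Names are unique, so a second create for the same name fails.
        if name in self.holders:
            raise LeaseConflict(name)
        self.holders[name] = holder

    def delete(self, name):
        self.holders.pop(name, None)


def try_become_primary(store, vnode_name, controller_id):
    """Return True if this controller now owns the VNode, False if it stays a replica."""
    try:
        store.create(vnode_name, controller_id)
        return True
    except LeaseConflict:
        return False


store = LeaseStore()
vnode = "vnode.2ce92dca-032e-4956-bc91-27b43406dad2"
first = try_become_primary(store, vnode, "mc-0")     # mc-0 wins and becomes primary
second = try_become_primary(store, vnode, "mc-1")    # mc-1 loses and stays a replica
store.delete(vnode)                                  # simplification: primary goes away
takeover = try_become_primary(store, vnode, "mc-1")  # the replica takes over
```

Only the controller that owns the Lease processes Pod events for that VNode, which is what turns the election into load sharding.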

Furthermore, we aim for load balancing among Module Controllers, with approximately balanced numbers of bases for each.

To facilitate open-source users and reduce learning costs, we implemented a self-balancing capability based on K8S without introducing additional components:

- Each Module Controller instance maintains its current workload, calculated as (number of VNodes currently managed / total number of VNodes). For example, if a Module Controller manages 3 VNodes out of 10, the actual workload is 3/10 = 0.3.

- Upon starting, Module Controllers can specify a maximum workload level. The workload is divided into segments based on this parameter. For example, if the maximum workload level is set to 10, each workload level contains 1/10 of the range, i.e., workload 0-0.1 is defined as workload=0, 0.1-0.2 as workload=1, and so on.

- In a sharded cluster configuration, before attempting to create a Lease, a Module Controller calculates its current workload level and waits according to the level. In this scenario, low workload Module Controllers attempt creation earlier, increasing success probability, achieving load balancing.
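The workload calculation above can be written out as a short sketch. The function names and the `step_ms` wait unit are hypothetical, and clamping a fully loaded controller into the top level is an assumption, since the text does not say how a workload of exactly 1.0 is handled.

```python
def workload_level(managed, total, max_level):
    """Map the ratio managed/total onto integer levels 0 .. max_level - 1."""
    if total <= 0:
        return 0  # nothing to manage yet, so the controller is idle
    # Integer arithmetic avoids floating-point rounding at segment boundaries.
    level = managed * max_level // total
    # Assumption: a workload of exactly 1.0 is placed in the highest level.
    return min(level, max_level - 1)


def lease_delay_ms(managed, total, max_level, step_ms=100):
    """Hypothetical back-off: each level waits one more step before creating the Lease."""
    return workload_level(managed, total, max_level) * step_ms
```

For the example in the text, `workload_level(3, 10, 10)` evaluates to 3, so with the assumed 100 ms step this controller would wait 300 ms, while an idle controller would try immediately.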

The process relies on K8S event broadcast mechanisms, with additional considerations depending on the operations pipeline selected during initial base onboarding:

- MQTT Operations Pipeline: Since MQTT inherently supports broadcasting, all Module Controller instances receive MQTT onboarding messages without additional configuration.

- HTTP Operations Pipeline: Due to HTTP’s nature, a base only interacts with a specific Module Controller instance during onboarding, requiring other capabilities to achieve initial load balancing. In actual deployment, multiple Module Controllers are served through a proxy (K8S Service/Nginx, etc.), allowing load balancing strategies to be configured at the proxy layer for initial onboarding balance.

2 - 6.6.2 ModuleControllerV2 Scheduling Principles

Brief Introduction

Module Controller V2 leverages the multi-tenant capabilities of Virtual Kubelet to map bases as Nodes in K8S. By defining modules as Pods, it reuses the K8S scheduler and various controllers to quickly build module operation and scheduling capabilities.

Base <-> VNode Mapping

Module Controller V2 implements base discovery through Tunnel, mapping it as a Node via Virtual Kubelet. Such Nodes are referred to as VNodes.

Upon base discovery, the configured Metadata and Network information are read. Metadata includes Name and Version, while Network includes IP and Hostname.

Metadata becomes Label information on the VNode to identify base details. Network information becomes the VNode’s network configuration. Future module pods scheduled onto the base will inherit the VNode’s IP for configuring Services, etc.

A VNode will also contain the following key information:

    apiVersion: v1
    kind: Node
    metadata:
      labels:
        virtual-kubelet.koupleless.io/component: vnode # vnode marker
        virtual-kubelet.koupleless.io/env: dev # vnode environment marker
        base.koupleless.io/name: base # Name from base Metadata configuration
        vnode.koupleless.io/tunnel: mqtt_tunnel_provider # Current tunnel ownership of the base
        base.koupleless.io/version: 1.0.0 # Base version number
      name: vnode.2ce92dca-032e-4956-bc91-27b43406dad2 # vnode name, latter part is UUID from the base maintenance pipeline
    spec:
      taints:
        - effect: NoExecute
          key: schedule.koupleless.io/virtual-node # vnode taint to prevent regular pod scheduling
          value: "True"
        - effect: NoExecute
          key: schedule.koupleless.io/node-env # node env taint to prevent non-current environment pod scheduling
          value: dev
    status:
      addresses:
        - address: 127.0.0.1
          type: InternalIP
        - address: local
          type: Hostname
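As a rough illustration of the mapping, the following sketch assembles a Node-shaped dictionary from base Metadata and Network information. The function `build_vnode` and its parameter names are hypothetical; only the label keys, taint keys, and address types are taken from the example above.

```python
def build_vnode(node_id, name, version, env, tunnel, ip, hostname):
    """Assemble a Node-like dict from base Metadata (name, version) and Network (ip, hostname)."""
    return {
        "apiVersion": "v1",
        "kind": "Node",
        "metadata": {
            "name": "vnode." + node_id,
            "labels": {
                "virtual-kubelet.koupleless.io/component": "vnode",
                "virtual-kubelet.koupleless.io/env": env,
                "base.koupleless.io/name": name,
                "base.koupleless.io/version": version,
                "vnode.koupleless.io/tunnel": tunnel,
            },
        },
        "spec": {
            "taints": [
                # Keeps regular Pods off the vnode.
                {"effect": "NoExecute", "key": "schedule.koupleless.io/virtual-node", "value": "True"},
                # Keeps module Pods of other environments off the vnode.
                {"effect": "NoExecute", "key": "schedule.koupleless.io/node-env", "value": env},
            ]
        },
        "status": {
            "addresses": [
                {"address": ip, "type": "InternalIP"},
                {"address": hostname, "type": "Hostname"},
            ]
        },
    }


node = build_vnode(
    node_id="2ce92dca-032e-4956-bc91-27b43406dad2",
    name="base", version="1.0.0", env="dev",
    tunnel="mqtt_tunnel_provider", ip="127.0.0.1", hostname="local",
)
```

Because name and version end up as ordinary Node labels, module Pods can select bases with standard node affinity rules.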

Module <-> Pod Mapping

Module Controller V2 defines a module as a Pod in the K8S system, allowing for rich scheduling capabilities through Pod YAML configuration.

A module Pod YAML configuration is as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-single-module-biz1
      labels:
        virtual-kubelet.koupleless.io/component: module # Necessary to declare pod type for module controller management
    spec:
      containers:
        - name: biz1 # Module name, must strictly match the artifactId in the module's pom
          image: https://serverless-opensource.oss-cn-shanghai.aliyuncs.com/module-packages/stable/biz1-web-single-host-0.0.1-SNAPSHOT-ark-biz.jar # jar package address, supports local file, http/https link
          env:
            - name: BIZ_VERSION # Module version configuration
              value: 0.0.1-SNAPSHOT # Must strictly match the version in the pom
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms: # Base node selection
              - matchExpressions:
                  - key: base.koupleless.io/version # Base version filtering
                    operator: In
                    values:
                      - 1.0.0 # Module may only be schedulable to certain versions of bases; if restricted, this field is required.
                  - key: base.koupleless.io/name # Base name filtering
                    operator: In
                    values:
                      - base # Module may only be schedulable to certain specific bases; if restricted, this field is required.
      tolerations:
        - key: "schedule.koupleless.io/virtual-node" # Ensure the module can be scheduled onto a base vnode
          operator: "Equal"
          value: "True"
          effect: "NoExecute"
        - key: "schedule.koupleless.io/node-env" # Ensure the module can be scheduled onto a base node in a specific environment; the value must equal the env taint of the target vnode
          operator: "Equal"
          value: "test"
          effect: "NoExecute"

The above example shows only the basic configuration. Additional configurations can be added to achieve richer scheduling capabilities, such as adding Pod AntiAffinity in Module Deployment scenarios to prevent duplicate module installations.
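To see how the taints on a VNode interact with the tolerations on a module Pod, here is a simplified model of the standard K8S matching rule (same key, same effect, and either operator Exists or operator Equal with an equal value). It is a sketch for illustration, not the scheduler's code, and the function names are hypothetical.

```python
def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False
    key = toleration.get("key")
    if key and key != taint["key"]:
        return False
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


def can_land_on(tolerations, taints):
    """A Pod fits only if every NoSchedule/NoExecute taint is tolerated."""
    hard = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations) for taint in hard)


vnode_taints = [
    {"key": "schedule.koupleless.io/virtual-node", "value": "True", "effect": "NoExecute"},
    {"key": "schedule.koupleless.io/node-env", "value": "dev", "effect": "NoExecute"},
]


def module_tolerations(env):
    return [
        {"key": "schedule.koupleless.io/virtual-node", "operator": "Equal",
         "value": "True", "effect": "NoExecute"},
        {"key": "schedule.koupleless.io/node-env", "operator": "Equal",
         "value": env, "effect": "NoExecute"},
    ]


fits_dev = can_land_on(module_tolerations("dev"), vnode_taints)    # True
fits_test = can_land_on(module_tolerations("test"), vnode_taints)  # False
```

This shows why the `node-env` toleration value has to match the environment of the target vnode: a Pod tolerating `test` does not fit a vnode tainted with `dev`, and a regular Pod with no tolerations does not fit any vnode.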

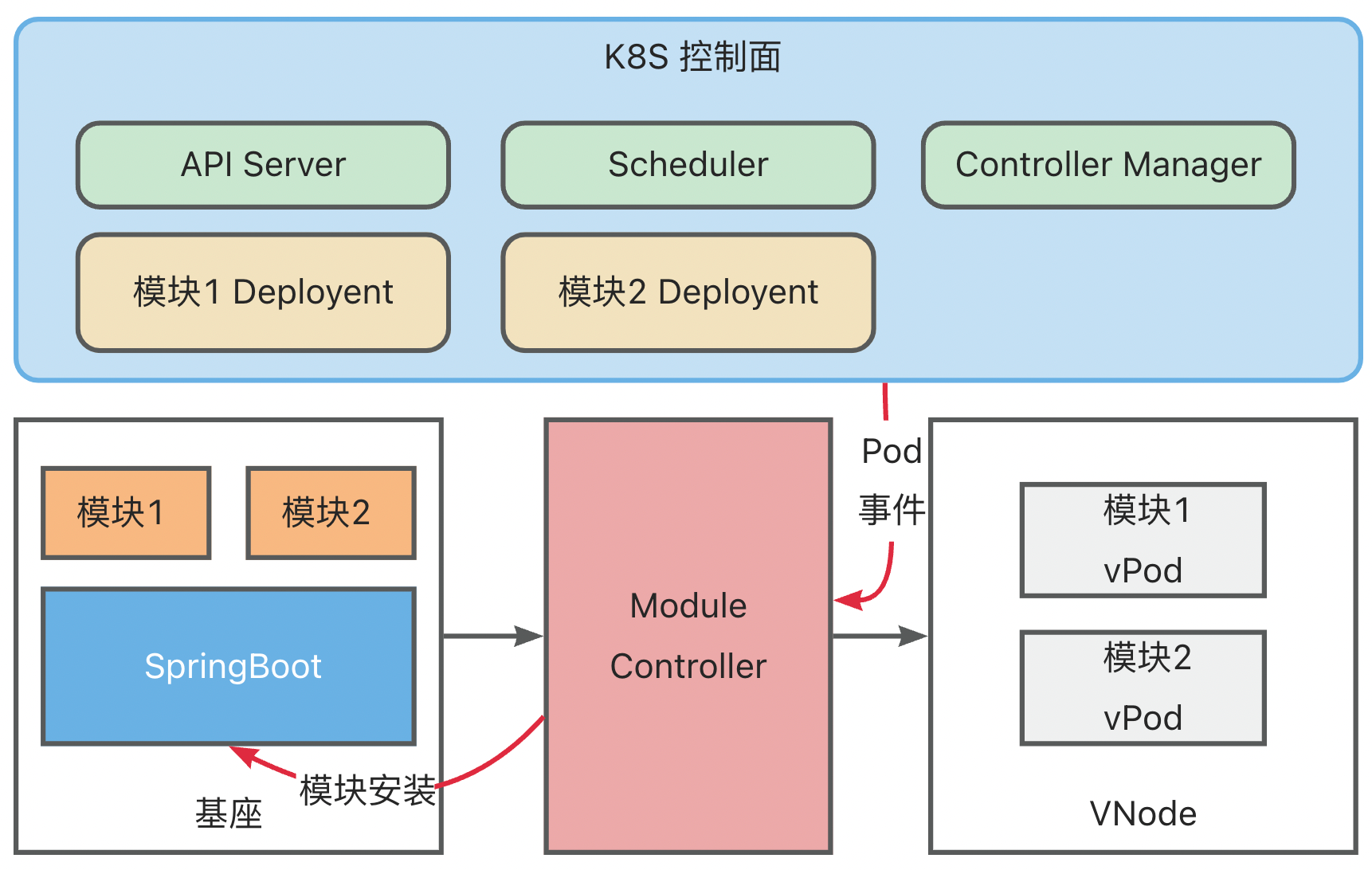

Operations Workflow

Based on the above structure and mapping relationships, we can leverage Kubernetes (K8S) native control plane components to fulfill diverse and complex module operation requirements.

The following walks through the entire module operations workflow, using a Module Deployment as an example and assuming the base has already been initialized and mapped:

- Create the Module Deployment (a native K8S Deployment, where the PodSpec within the Template defines module information). The Deployment Controller in the K8S ControllerManager creates a virtual Pod (vPod) according to the Deployment configuration. At this point, the vPod has not been scheduled, so its status is Pending.

- The K8S Scheduler scans unscheduled vPods and schedules them onto appropriate virtual Nodes (vNodes) based on selector, affinity, and taint/toleration configurations.

- Upon detecting that a vPod has been scheduled, the Module Controller retrieves the module information defined within the vPod and sends installation commands to the base.

- After the base completes the module installation, it reports the module installation status to the Module Controller, which translates the module status into Container Status and syncs it to Kubernetes.

- Meanwhile, the base continuously reports its health status. The Module Controller maps Metaspace capacity and usage to Node Memory and updates this information in Kubernetes.
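The step in which the Module Controller reads module information from a scheduled vPod can be sketched as below. The mapping itself (container name as module name, `image` as the jar address, `BIZ_VERSION` as the module version) comes from the Pod example earlier; the function name and the shape of the returned dictionaries are hypothetical.

```python
def modules_from_pod(pod):
    """Extract one module description per container of a module Pod."""
    modules = []
    for container in pod["spec"]["containers"]:
        env = {e["name"]: e.get("value", "") for e in container.get("env", [])}
        modules.append({
            "name": container["name"],              # must match the artifactId in the pom
            "version": env.get("BIZ_VERSION", ""),  # must match the version in the pom
            "url": container["image"],              # jar package address
        })
    return modules


pod = {
    "metadata": {"name": "test-single-module-biz1"},
    "spec": {
        "nodeName": "vnode.2ce92dca-032e-4956-bc91-27b43406dad2",
        "containers": [{
            "name": "biz1",
            "image": "https://serverless-opensource.oss-cn-shanghai.aliyuncs.com/module-packages/stable/biz1-web-single-host-0.0.1-SNAPSHOT-ark-biz.jar",
            "env": [{"name": "BIZ_VERSION", "value": "0.0.1-SNAPSHOT"}],
        }],
    },
}
modules = modules_from_pod(pod)
```

Each resulting entry carries what an installation command needs: which module, which version, and where to fetch the jar.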

Implementation Logic

The core logic involved in the implementation is as follows:

Model definition and logical relationships:

How to Debug

- Start the test version of module-controller in minikube using the image serverless-registry.cn-shanghai.cr.aliyuncs.com/opensource/test/module-controller-v2:v2.1.4. The image is preconfigured with a go-delve remote debugging environment, and the debug port is 2345.

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: module-controller
      spec:
        replicas: 1
        selector:
          matchLabels:
            app: module-controller
        template:
          metadata:
            labels:
              app: module-controller
          spec:
            serviceAccountName: virtual-kubelet # Service Account configured in the previous step
            containers:
              - name: module-controller
                image: serverless-registry.cn-shanghai.cr.aliyuncs.com/opensource/test/module-controller-v2:v2.1.4 # Pre-built image; built from debug.Dockerfile in the module-controller root directory
                imagePullPolicy: Always
                resources:
                  limits:
                    cpu: "1000m"
                    memory: "400Mi"
                ports:
                  - name: httptunnel
                    containerPort: 7777
                  - name: debug
                    containerPort: 2345
                env:
                  - name: ENABLE_HTTP_TUNNEL
                    value: "true"

- Log in to the started container (replace the Pod name with the one in your cluster):

      kubectl exec module-controller-544c965c78-mp758 -it -- /bin/sh

- Inside the container, start delve:

      dlv --listen=:2345 --headless=true --api-version=2 --accept-multiclient exec ./module_controller

- Exit the container and set up port forwarding for port 2345 to the same Pod:

      kubectl port-forward module-controller-544c965c78-mp758 2345:2345

- Start remote debugging in Goland or IDEA, with Host set to localhost and Port set to 2345.

3 - 6.6.3 Core Process Timeline

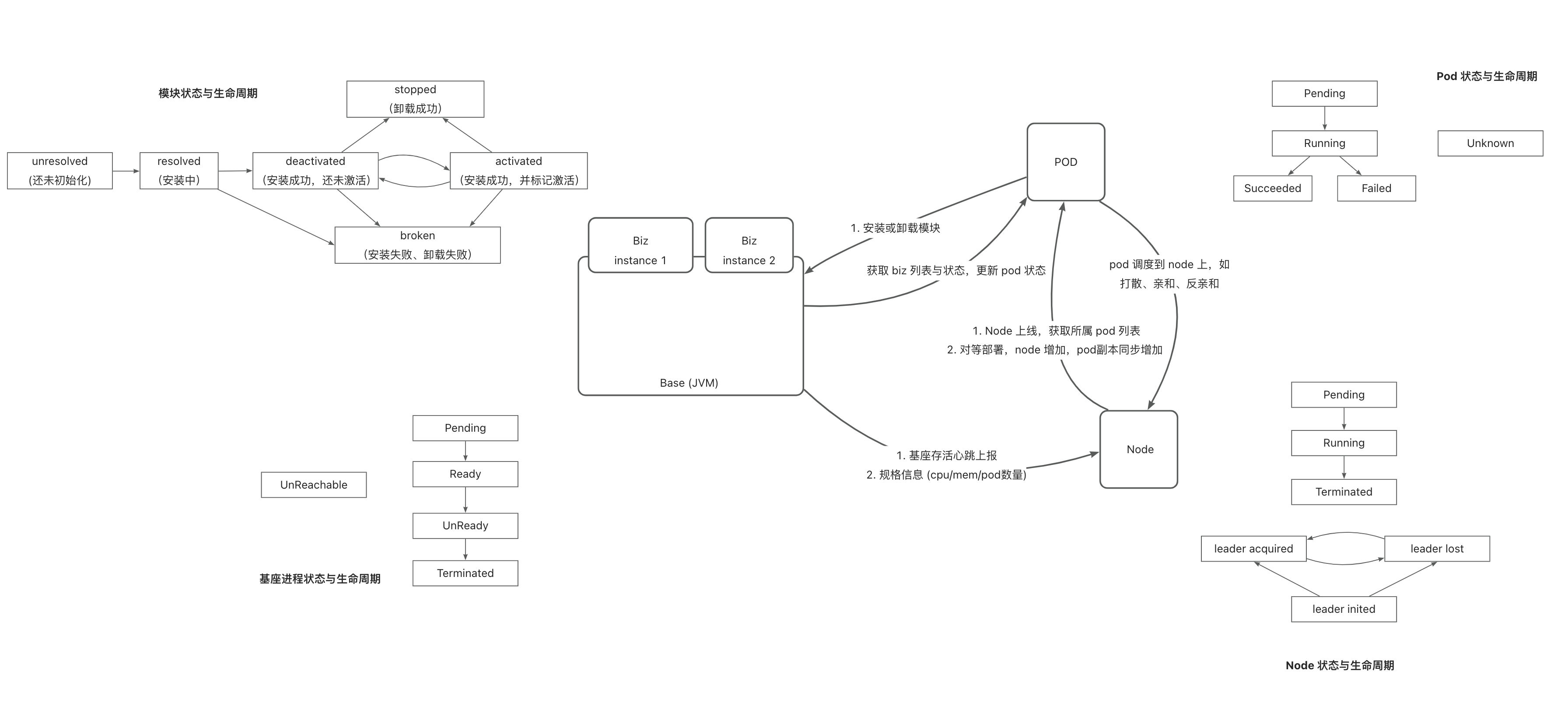

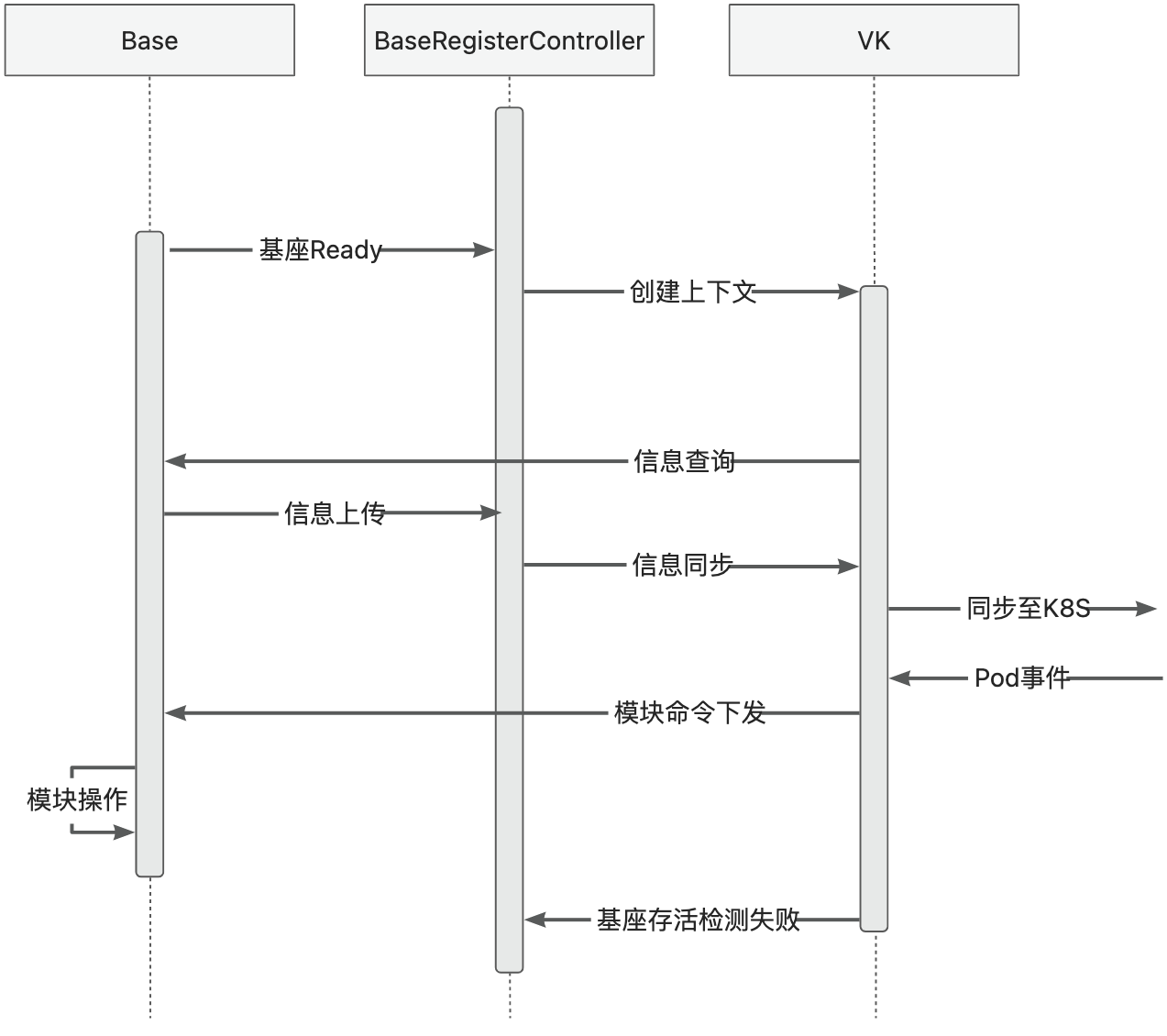

Base Lifecycle

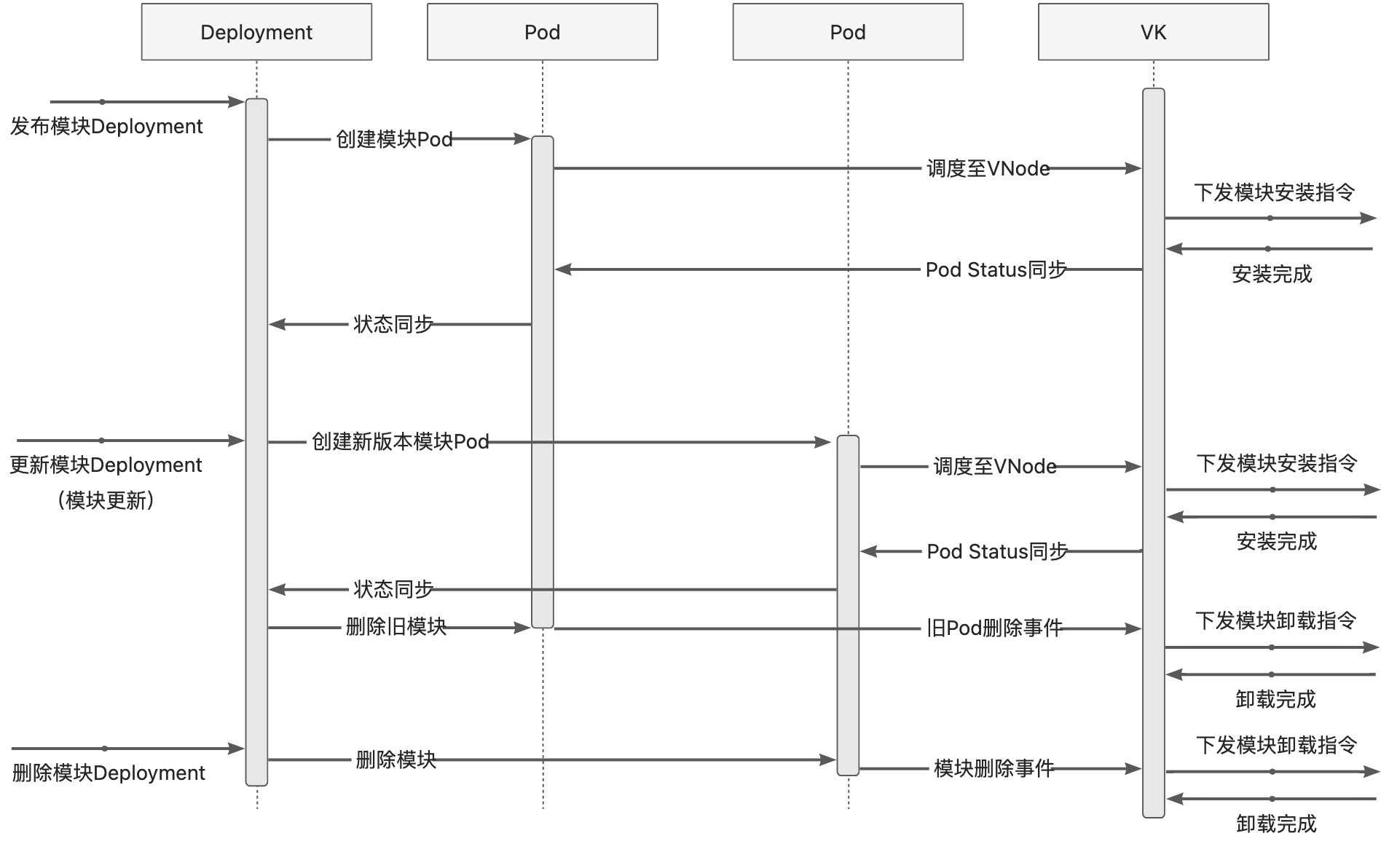

Module Release and O&M

4 - 6.6.4 Kubelet Proxy

Kubelet Proxy

The Kubelet Proxy is an enhanced feature of Module Controller V2 on the K8s side. It allows users to interact directly with Module Controller V2 using the kubectl tool, providing an operational experience similar to the native K8s Kubelet.

Logs command schematic

Iteration Plan

The adaptation will be carried out in two phases:

- Use the proxy solution to provide logs capability for modules deployed in the Pod base -> Completed

- Ensure semantic consistency and implement logs capability through tunnel or arklet for smooth transition -> Planned

Notes

Currently, only the logs capability is implemented, and the base must be deployed in the K8s cluster.